AI‑Powered Manuscript Analysis

March 19, 2025 · Studio Vi

In the rapidly evolving landscape of artificial intelligence, the ability to analyze and interpret literary works has emerged as a crucial tool for publishers, authors, and researchers. BetterStories, our AI-driven manuscript analysis product, leverages natural language processing (NLP) and deep learning techniques to extract meaningful insights from books, articles, and other written content. This article outlines the technical foundations of BetterStories, the challenges of building it, and how we tackled them.

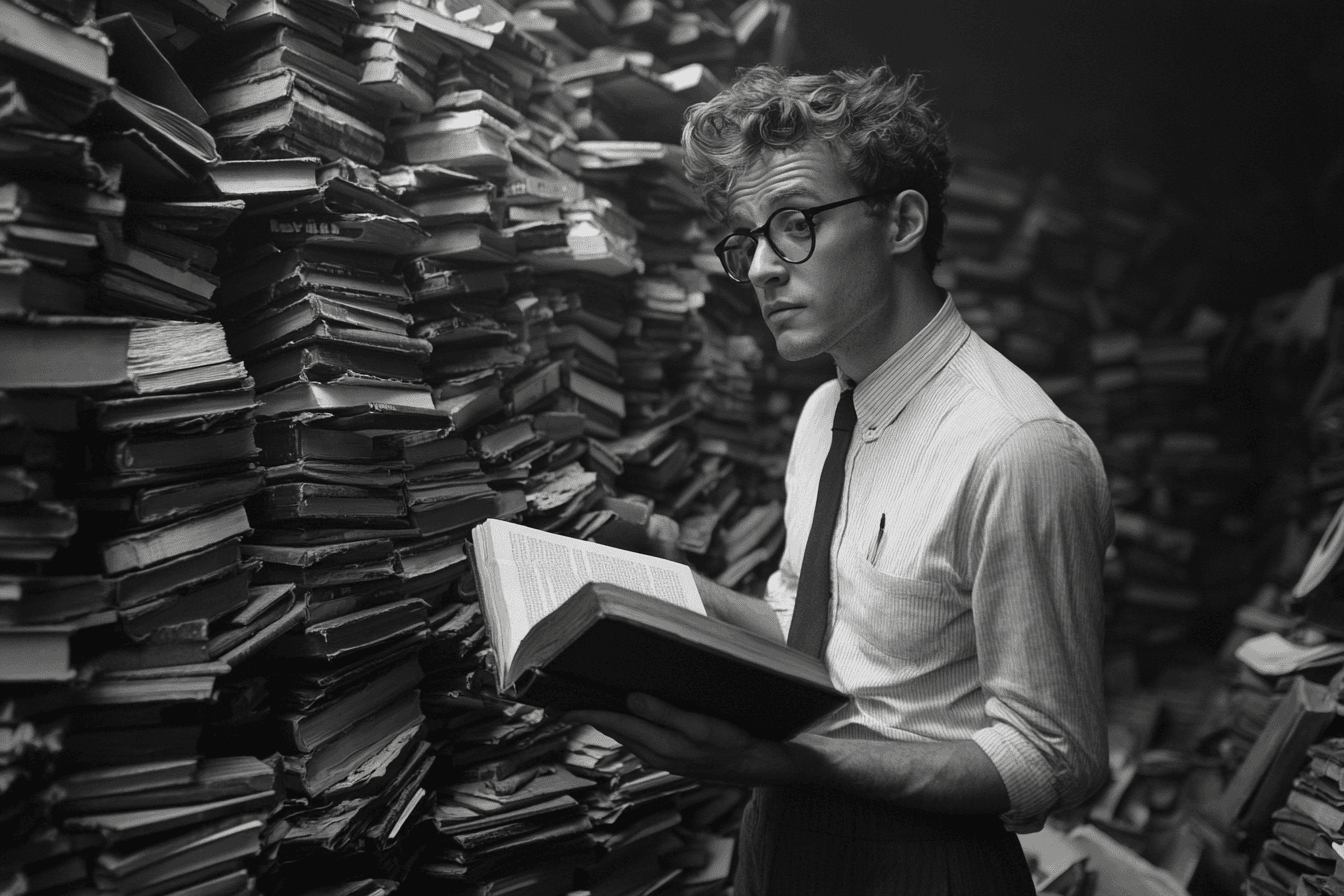

Too many manuscripts, too little time

Publishers are burdened by the large number of manuscripts they receive, often leading to significant challenges in identifying promising works. Amid this overwhelming pile, some manuscripts with interesting plots and themes may be overlooked entirely. The process of reviewing these manuscripts is not only time-consuming but also inefficient, as only 5% of submissions typically progress past the initial selection stage. This inefficiency results in missed opportunities for discovering hidden gems and requires a more streamlined and effective approach to manuscript evaluation.

AI-driven literary insight

Our AI-driven manuscript analysis platform transforms the traditional evaluation process by integrating natural language processing (NLP) and deep learning techniques. This comprehensive solution not only automates the tedious aspects of manuscript review but also provides nuanced insights that empower publishers, authors, and researchers to make data-driven decisions.

- Emotional and sentiment analysis with variation mapping: Our solution delves deep into the manuscript to track emotional intensity and sentiment trends throughout the text. Instead of providing a simple positive or negative label, it maps subtle shifts in mood and tone, helping users pinpoint moments of dramatic change or emotional peaks that could signal key turning points in the narrative.

- Automated spellcheck and readability scoring: Beyond basic spellchecking, our tool integrates a robust readability scoring system that assesses sentence structure, vocabulary complexity, and overall clarity. This dual-function approach ensures that manuscripts are not only free of elementary errors but also refined for target audiences.

- Topic modeling and theme detection: Employing sophisticated topic modeling techniques, the AI automatically identifies dominant themes and recurring topics within the manuscript. This feature provides an overarching view of the narrative, unveiling subtle motifs and narrative threads. These insights assist in aligning content with current literary trends and market demands, ensuring that compelling stories do not slip through the cracks.

- Quality of writing score: By analyzing coherence, grammar, and stylistic elements, the system generates a quality score that helps authors and publishers assess the manuscript’s overall readability and engagement level.
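To make the idea of variation mapping concrete, here is a minimal sketch in Python. It assumes each text chunk has already been given a sentiment score between -1 and 1 by some model; the scores, the function names `smooth` and `turning_points`, and the threshold of 0.4 are all illustrative assumptions, not the values or code used in BetterStories.

```python
from statistics import mean
from typing import List


def smooth(scores: List[float], window: int = 3) -> List[float]:
    """Centered moving average; the window shrinks at the edges of the list."""
    half = window // 2
    return [
        mean(scores[max(0, i - half): i + half + 1])
        for i in range(len(scores))
    ]


def turning_points(scores: List[float], threshold: float = 0.4) -> List[int]:
    """Indices of chunks whose score differs from the previous chunk by more than `threshold`."""
    return [
        i for i in range(1, len(scores))
        if abs(scores[i] - scores[i - 1]) > threshold
    ]


# Hypothetical per-chunk sentiment scores in [-1, 1] (made up for illustration).
chunk_scores = [0.2, 0.3, 0.25, -0.6, -0.7, -0.5, 0.4, 0.6]

arc = smooth(chunk_scores)             # smoothed emotional arc, one value per chunk
shifts = turning_points(chunk_scores)  # chunks where the mood changes sharply
```

The smoothed arc is what one would plot as the emotional curve of the manuscript, while the flagged indices point to candidate turning points in the narrative.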

Building a high-performance platform

To deliver these AI-driven insights seamlessly, we developed a robust, modern platform that optimizes both user experience and scalability:

- React and Next.js for server-side rendering (SSR): By leveraging Next.js on top of React, we ensure fast page loads and efficient rendering. This setup enhances the platform’s performance and SEO, making it more accessible to a global audience.

- Formik and Firebase: Formik handles form management and validation, simplifying user input processes and reducing development complexity. Meanwhile, Firebase provides a secure, scalable backend solution for storing user data and managing real-time updates.

- Subscription model with Stripe: A flexible subscription model powered by Stripe enables monetization and easy scaling. Users can choose from various plans, ensuring that publishers and authors only pay for the features they need.

- Full authentication and user profiles: The platform integrates a robust authentication system, supporting both traditional email logins and Google sign-in. Each user has a dedicated profile with controlled upload limits, helping manage resources efficiently. A personal library of uploaded manuscripts keeps content organized and easily accessible for future reference.

This combination of advanced AI-driven manuscript analysis and a high-performance platform creates a holistic environment for manuscript evaluation, enabling publishers, authors, and researchers to make well-informed, data-backed decisions with minimal friction.

Vidar Daniels, Digital Director

Ready to dive deeper?

Challenges & implementation

- Choosing the right model: Millions vs. Billions of parameters?

The current market focus in AI is dominated by large-scale generative models such as GPT-3 and Gemini, which boast billions of parameters. However, for our tasks, and for emotional analysis in particular, these large models do not necessarily yield superior performance. Instead, we opted for Google’s BERT, a transformer-based model that has been fine-tuned for sentiment and emotion analysis. For topic extraction, we implemented BERTopic, which required additional fine-tuning to align with literary content analysis. This approach allowed us to maintain efficiency without compromising accuracy.

- Dutch language is a challenge

One of the most significant hurdles we faced was the limited support for the Dutch language in AI models. While AI advancements continue at a rapid pace, dedicated Dutch-language models remain underdeveloped. To address this, we implemented an aggregation approach, where results from smaller text chunks were combined to form a more accurate overall analysis. This method allowed us to mitigate inconsistencies that arise from analyzing individual chunks in isolation, ensuring a more reliable assessment of Dutch-language manuscripts. Furthermore, Dutch is often heavily underrepresented in multilingual LLMs, which ruled them out as an option.

- Efficient deployment at scale

Given the large size of manuscripts, processing them efficiently posed a challenge. To address this, we deployed our solution on an Azure Virtual Machine equipped with an NVIDIA T4 GPU, enabling accelerated inference. Since manuscripts contain substantial amounts of text, we implemented a chunking and batch-processing strategy. By dividing the manuscripts into smaller segments, we ensured that our AI models could process them quickly while maintaining responsiveness. Opting for BERT-based models also meant we could conveniently host the entire application on our VM without excessive GPU memory or RAM requirements. Self-hosting also means that your stories never leave our environment and remain protected.
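The chunking, batch-processing, and aggregation steps described in this section can be sketched roughly as follows. This is an illustration under stated assumptions rather than our production code: the word-based chunk size, the batch size, the length-weighted average used for aggregation, and the `dummy_score_batch` stand-in for a real BERT model are all hypothetical.

```python
from typing import Callable, Iterator, List


def chunk_words(text: str, max_words: int = 200) -> List[str]:
    """Split a manuscript into consecutive chunks of at most `max_words` words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def batches(items: List[str], batch_size: int = 16) -> Iterator[List[str]]:
    """Yield consecutive batches so the model scores several chunks per call."""
    for i in range(0, len(items), batch_size):
        yield items[i:i + batch_size]


def analyze(
    text: str,
    score_batch: Callable[[List[str]], List[float]],
    max_words: int = 200,
    batch_size: int = 16,
) -> float:
    """Score every chunk in batches, then combine the scores into one document score.

    Aggregation here is a word-count-weighted average (an assumption), so a short
    trailing chunk does not count as much as a full-length one.
    """
    chunks = chunk_words(text, max_words)
    if not chunks:
        return 0.0
    scores: List[float] = []
    for batch in batches(chunks, batch_size):
        scores.extend(score_batch(batch))
    weights = [len(chunk.split()) for chunk in chunks]
    return sum(s * w for s, w in zip(scores, weights)) / sum(weights)


def dummy_score_batch(batch: List[str]) -> List[float]:
    """Hypothetical stand-in for a model: the fraction of words equal to 'good'."""
    return [chunk.split().count("good") / len(chunk.split()) for chunk in batch]


overall = analyze("good good bad bad bad", dummy_score_batch, max_words=2, batch_size=2)
```

In a real deployment, `score_batch` would wrap the model's batched inference call on the GPU, and the chunk size would be chosen to fit the model's maximum input length.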

To provide an intuitive user experience, we integrated the system with a user-friendly dashboard, enabling users to upload manuscripts and receive detailed analytical reports based on these advanced techniques.

Conclusion: a smarter way to evaluate manuscripts

Our tool aims to provide a quantitative analysis of the slush pile, allowing publishers to single out the works that have a higher potential for success and that fit the publishing house’s proposition.

By combining the power of deep learning with linguistic expertise, it provides authors, publishers, and researchers with actionable insights, ensuring higher quality and efficiency in literary production. Future enhancements will include expanded multilingual support and integration with AI-assisted content generation tools.

Next steps

We are continuously refining our models and implementing new features that are of value to our users. Interested publishers and researchers can contact us for a demo and pilot testing opportunities.
